08 Nov 2018

Language identification is the task of determining which language a given string is written in. There are numerous methods for it, but in general the task is framed as sequence classification: we assign a single label, the language, to the whole sequence. For instance, a method for language identification using neural networks can be seen at github.com/adelra/langident

Since training a neural network, and on top of that preparing a dataset for it to train on, is time-consuming, we will stick to existing methods.

Langdetect:

Langdetect is a lightweight and fast Python library for detecting the language of a text.

```python
>>> from langdetect import detect
>>> detect("I am bigger than you")
'en'
>>> detect("Bonjour, mademoiselle")
'fr'
```

Running the detector over the dataset extracted from Craigslist gives the following counts per language:

{'fr': 155, 'en': 5179, 'no': 65, 'et': 28, 'id': 52, 'af': 71, 'de': 321, 'da': 73, 'vi': 53, 'ca': 99, 'nl': 91, 'tl': 44, 'sv': 32, 'it': 118, 'so': 43, 'sl': 6, 'hu': 25, 'pt': 47, 'cy': 32, 'hr': 3, 'es': 67, 'sw': 7, 'ro': 64, 'fi': 6, 'pl': 19, 'tr': 17, 'lt': 9, 'lv': 8, 'sq': 5, 'zh-cn': 12, 'cs': 2, 'sk': 6}
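One way counts like these could be produced is sketched below, assuming the extracted dataset has one ad per line. The helper count_languages is hypothetical (it is not part of langdetect); it takes the detector as a parameter, so any function that maps a string to a language code fits, and it skips blank lines because langdetect raises an exception for text with no usable features.

```python
from collections import Counter


def count_languages(lines, detect):
    """Tally the language code returned by `detect` for every non-empty line."""
    counts = Counter()
    for line in lines:
        text = line.strip()
        if not text:
            continue  # skip blank lines: langdetect raises an exception for text with no features
        try:
            counts[detect(text)] += 1
        except Exception:  # langdetect raises LangDetectException; caught broadly so any detector fits
            counts["unknown"] += 1
    return dict(counts)


def fake_detect(text):
    """Stand-in detector for the demo: French if the text greets in French, else English."""
    return "fr" if "Bonjour" in text else "en"


sample = ["Bonjour, mademoiselle", "", "I am bigger than you", "Lexus LX for sale"]
print(count_languages(sample, fake_detect))  # {'fr': 1, 'en': 2}
```

With the real library, pass langdetect's detect instead of fake_detect. langdetect is non-deterministic, so setting DetectorFactory.seed = 0 (from langdetect import detect, DetectorFactory) before detecting makes repeated runs agree. Assuming the dataset lives in output_craigslist.txt, the call would be count_languages(open("output_craigslist.txt"), detect).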


08 Nov 2018

POS (part-of-speech) tagging is an essential part of Natural Language Processing. There are numerous existing libraries for Python (e.g. NLTK) and for other languages such as Java (e.g. Stanford POS Tagger). In this example, we use NLTK for POS tagging. First, we import NLTK’s POS tagger using a selective import:

```python
from nltk import pos_tag
```

Then, as usual, we open and read our text file.

```python
with open("output_craigslist.txt", "r") as fp:
    string_read = fp.readlines()
```

Note that here we have used readlines(). There are three ways to read a file object: read() returns the whole contents as a single string, readline() returns the next line (its optional argument limits how many characters are read; it is not a line number), and readlines() returns a list containing all the lines of the file.

We then iterate over that list and tokenize each line with str.split(" "). There are other ways to tokenize text, such as nltk.tokenize, but we stick to split for its simplicity and speed. Splitting on a single space leaves empty strings wherever two spaces are adjacent, so we remove them with filter, which keeps only truthy items when its function argument is None:

```python
line_tokenized = list(filter(None, line_tokenized))
```

Finally, we POS tag each line and print the tokens tagged NNP, the Penn Treebank tag for singular proper nouns.

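```python
for line in string_read:
    # tokenize on single spaces, then drop the empty strings left by repeated spaces
    line_tokenized = list(filter(None, line.strip().split(" ")))
    tagged = pos_tag(line_tokenized)
    for i in tagged:
        if i[1] == 'NNP':  # NNP: singular proper noun
            print(i)
```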
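The file-reading methods and the split/filter tokenization described above can be compared on a small in-memory file. This sketch uses io.StringIO and a made-up two-line text (not taken from the dataset), so it runs without output_craigslist.txt.

```python
import io

# A made-up two-line "file" standing in for output_craigslist.txt.
text = "Lexus  LX for sale\nContact via QR Code\n"

whole = io.StringIO(text).read()       # read(): the whole contents as one string

f = io.StringIO(text)
first = f.readline()                   # readline(): the next line, including its "\n"
partial = f.readline(7)                # the argument caps the characters read; it is not a line number

lines = io.StringIO(text).readlines()  # readlines(): a list with every line

tokens = lines[0].strip().split(" ")   # the double space yields an empty string in the list
cleaned = list(filter(None, tokens))   # filter(None, ...) keeps only truthy, i.e. non-empty, tokens
print(cleaned)                         # ['Lexus', 'LX', 'for', 'sale']
```

Calling str.split() with no argument splits on runs of whitespace, so lines[0].split() gives the same cleaned list without the filter step.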

The output for the Craigslist dataset is:

```
('Dammam', 'NNP')
('Lexus', 'NNP')
('LX', 'NNP')
('QR', 'NNP')
('Code', 'NNP')
('Link', 'NNP')
('Lexus', 'NNP')
('LX', 'NNP')
('United', 'NNP')
('Belgium', 'NNP')
('Following', 'NNP')
('Lexus', 'NNP')
('LX', 'NNP')
('Lexus', 'NNP')
('LX', 'NNP')
('Lexus', 'NNP')
```
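Proper nouns such as Lexus and LX repeat many times in the output, so it can help to count them instead of printing every match. The sketch below is a hypothetical helper, count_proper_nouns, that works on lists of (token, tag) pairs like those pos_tag returns; it also counts NNPS (plural proper nouns), which the NNP-only loop does not. The tagged lines are written by hand so the example runs without NLTK.

```python
from collections import Counter


def count_proper_nouns(tagged_lines, tags=("NNP", "NNPS")):
    """Count tokens whose Penn Treebank tag is a proper-noun tag (NNP singular, NNPS plural)."""
    counts = Counter()
    for tagged in tagged_lines:  # one list of (token, tag) pairs per line, as pos_tag returns
        for token, tag in tagged:
            if tag in tags:
                counts[token] += 1
    return counts


# Hand-written tagged lines shaped like pos_tag output (tags chosen for illustration).
tagged_lines = [
    [("Lexus", "NNP"), ("LX", "NNP"), ("for", "IN"), ("sale", "NN")],
    [("Lexus", "NNP"), ("LX", "NNP"), ("in", "IN"), ("Dammam", "NNP")],
]
print(count_proper_nouns(tagged_lines).most_common(2))  # [('Lexus', 2), ('LX', 2)]
```

To apply it to the real data, pass one pos_tag result per line, e.g. count_proper_nouns(pos_tag(line.split()) for line in string_read). Note that pos_tag needs NLTK's perceptron tagger model, downloaded once with nltk.download (the resource is named averaged_perceptron_tagger in older NLTK releases and averaged_perceptron_tagger_eng in recent ones).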


02 Nov 2018

Introduction

In this post I collect some tips, tricks and quick fixes for problems I have run into while developing neural network / machine learning projects. Most of these problems are easy to fix, but they are a headache while you are working!

sparse_softmax_cross_entropy’s loss is NaN, what’s going on?

When the loss from sparse_softmax_cross_entropy is NaN or keeps going up, try a lower learning rate. The NaN appears because the loss diverges: it grows so large that it overflows the floating-point range, and arithmetic on the resulting infinities produces NaN.

An example is shown below, for a CNN followed by two fully connected layers trained on the CIFAR-10 dataset:

```
Iter 0, Loss= 22932.830078, Training Accuracy= 0.13000
Iter 0, Loss= 5908024.500000, Training Accuracy= 0.12000
Iter 0, Loss= 128835657728.000000, Training Accuracy= 0.18000
Iter 0, Loss= 16028694803369689088.000000, Training Accuracy= 0.12000
Iter 0, Loss= 392477918453616813397006876672.000000, Training Accuracy= 0.13000
Iter 0, Loss= nan, Training Accuracy= 0.06000
Iter 0, Loss= nan, Training Accuracy= 0.15000
Iter 0, Loss= nan, Training Accuracy= 0.13000
```
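The same pattern can be reproduced without TensorFlow. The toy sketch below runs plain gradient descent on the loss f(w) = w * w, a function chosen only because its behaviour is easy to predict (it is not the CIFAR-10 model): with a learning rate of 1.5 every update overshoots the minimum and doubles |w|, so the loss grows until it overflows to inf, while a learning rate of 0.1 shrinks it steadily.

```python
import math


def train(lr, steps=2000, w=1.0):
    """Plain gradient descent on the toy loss f(w) = w * w, whose gradient is 2 * w."""
    losses = []
    for _ in range(steps):
        w = w - lr * 2 * w  # gradient descent update
        loss = w * w        # a float overflow here gives inf rather than an exception
        losses.append(loss)
        if not math.isfinite(loss):
            break           # stop as soon as the loss is inf or nan
    return losses


high = train(lr=1.5)  # each step maps w to -2w, so the loss is multiplied by 4
low = train(lr=0.1)   # each step maps w to 0.8w, so the loss shrinks

print(len(high), high[-1])         # 512 inf
print(len(low), low[-1] < low[0])  # 2000 True
```

In Keras, the TerminateOnNaN callback stops training as soon as the loss becomes NaN, which saves time while you look for a learning rate that does not diverge.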

Data generator only returns the same value

This is often because every time you call the data generator, you are actually calling the generator function itself, which creates a brand-new generator:

```python
def mygen():
    for i in range(5):
        yield i
```

Every time you run next(mygen()) you get the first item again, because each call to mygen() returns a fresh generator. Instead, store mygen() in a variable once and call next() on that variable:

```
>def mygen():
>    for i in range(5):
>        yield i
>gen = mygen()
>next(gen)
Out[16]: 0
>next(gen)
Out[17]: 1
>next(gen)
Out[18]: 2
>next(gen)
Out[19]: 3
>next(gen)
Out[20]: 4
>next(gen)
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/site-packages/IPython/core/interactiveshell.py", line 2961, in run_code
    exec(code_obj, self.user_global_ns, self.user_ns)
  File "<ipython-input-21-6e72e47198db>", line 1, in <module>
    next(gen)
StopIteration
```
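Both cases can be checked in a short standard-library script: calling next(mygen()) repeatedly always restarts, while advancing a stored generator walks through its values. Passing a default as the second argument of next() returns that default instead of raising StopIteration once the generator is exhausted.

```python
def mygen():
    for i in range(5):
        yield i


# Bug: every mygen() call creates a new generator, so next() always returns its first item.
wrong = [next(mygen()) for _ in range(3)]
print(wrong)  # [0, 0, 0]

# Fix: create the generator once and keep advancing that same object.
gen = mygen()
right = [next(gen) for _ in range(3)]
print(right)  # [0, 1, 2]

rest = list(gen)             # consumes whatever is left
after_end = next(gen, None)  # a default value avoids StopIteration once it is exhausted
print(rest, after_end)       # [3, 4] None
```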

Keras output layer shape error

Error when checking model target: expected

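This error is most often caused by labels whose shape does not match the output layer: for example, a softmax output layer trained with categorical_crossentropy expects one-hot encoded (categorical) label vectors. To convert integer labels, use keras.utils.np_utils.to_categorical.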
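To see what the output layer expects, the sketch below reimplements the conversion in plain NumPy. The helper one_hot is hypothetical and only meant to mirror the shape of to_categorical's default output (float32, one row per label, num_classes columns); in a real project, use the Keras function. Alternatively, you can keep integer labels and compile the model with loss='sparse_categorical_crossentropy', which accepts them directly.

```python
import numpy as np


def one_hot(labels, num_classes=None):
    """Convert integer class labels (0 .. num_classes - 1) into one-hot rows."""
    labels = np.asarray(labels, dtype=int).ravel()
    if num_classes is None:
        num_classes = int(labels.max()) + 1
    out = np.zeros((labels.shape[0], num_classes), dtype="float32")
    out[np.arange(labels.shape[0]), labels] = 1.0  # one 1.0 per row, at the label's index
    return out


y = [0, 2, 1, 2]
Y = one_hot(y, num_classes=3)
print(Y.shape)  # (4, 3)
print(Y[1])     # [0. 0. 1.]
```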

Regularization in RNNs

Regularization is not commonly used in RNNs, and most of the time it gives worse results (according to Andrej Karpathy in CS231n).

Keras fit_generator

The generator passed to fit_generator must always yield a tuple, either:

- a tuple (inputs, labels), or

- a tuple (inputs, labels, sample_weights).
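Below is a minimal generator of the (inputs, labels) form, assuming the data fits in NumPy arrays x and y; the name batch_generator is hypothetical. Keras expects such a generator to loop over its data indefinitely, and an epoch ends after steps_per_epoch batches, so the sketch reshuffles and starts again each time it runs out of data.

```python
import numpy as np


def batch_generator(x, y, batch_size):
    """Yield (inputs, labels) tuples indefinitely, shuffling the data on every pass."""
    n = len(x)
    while True:  # Keras expects the generator to loop over its data indefinitely
        order = np.random.permutation(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            yield x[idx], y[idx]  # a 2-tuple; append sample weights as a third element if needed


x = np.arange(10).reshape(10, 1).astype("float32")
y = np.arange(10)
gen = batch_generator(x, y, batch_size=4)
xb, yb = next(gen)
print(xb.shape, yb.shape)  # (4, 1) (4,)
```

With a compiled Keras model (assumed here as model), training would then look like model.fit_generator(batch_generator(x, y, 32), steps_per_epoch=int(np.ceil(len(x) / 32)), epochs=10).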

12 Apr 2018

Big update: Google is now giving free TPUs in Colab! Awesome!

Google Colab is an awesome tool. It gives you free GPUs for up to 12 hours at a time, all for FREE!

Colab runs your code on VMs connected to a Python Jupyter notebook; you can run shell commands from a notebook cell by prefixing them with !.

However, using Google Colab is a bit tricky. First, you have to create a Jupyter notebook at colab.research.google.com:

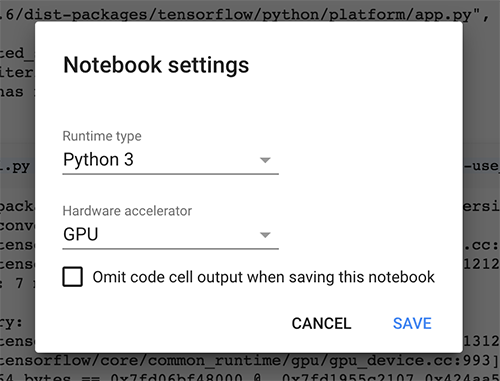

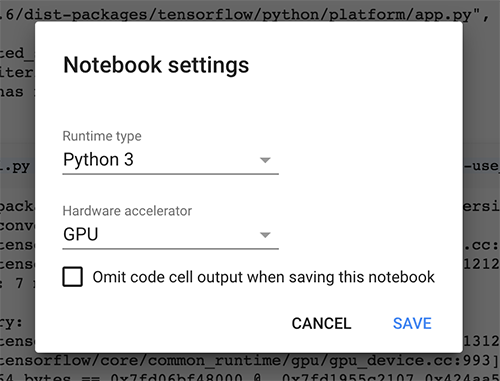

Now you have your Jupyter notebook. Go to Edit -> Notebook settings -> Hardware accelerator and select GPU.

At the time of writing, Google provides Tesla K80 GPUs.

To install the necessary packages and connect your Google Drive, insert the code below into a cell and run it. You will be prompted twice to enter a verification code from your Google account:

```
# install google-drive-ocamlfuse, a FUSE filesystem for Google Drive
!apt-get install -y -qq software-properties-common python-software-properties module-init-tools
!add-apt-repository -y ppa:alessandro-strada/ppa 2>&1 > /dev/null
!apt-get update -qq 2>&1 > /dev/null
!apt-get -y install -qq google-drive-ocamlfuse fuse
# authenticate the VM with your Google account
from google.colab import auth
auth.authenticate_user()
from oauth2client.client import GoogleCredentials
creds = GoogleCredentials.get_application_default()
import getpass
# print the authorization URL, then paste the code it gives you
!google-drive-ocamlfuse -headless -id={creds.client_id} -secret={creds.client_secret} < /dev/null 2>&1 | grep URL
vcode = getpass.getpass()
!echo {vcode} | google-drive-ocamlfuse -headless -id={creds.client_id} -secret={creds.client_secret}
```

To install libraries you can do the following:

To check that the GPU is enabled and that TensorFlow recognizes it, run the code below:

```python
import tensorflow as tf

device_name = tf.test.gpu_device_name()
if device_name != '/device:GPU:0':
    raise SystemError('GPU device not found')
print('Found GPU at: {}'.format(device_name))
```

To mount your drive folder into your VM:

```
!mkdir -p drive
!google-drive-ocamlfuse drive -o nonempty
```

Remember that -o nonempty lets FUSE mount over a mount point that already contains files; you only need it when the drive directory is not empty.
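The decision can also be made automatically. The sketch below uses a hypothetical helper, mount_command, that creates the mount point (like mkdir -p drive) and adds -o nonempty only when the directory already contains files. It only builds the command list; once google-drive-ocamlfuse is installed and authorized, it could be run from a cell with subprocess.run(mount_command(), check=True).

```python
import os
import tempfile


def mount_command(mount_point="drive"):
    """Build the google-drive-ocamlfuse mount command, adding -o nonempty only when needed."""
    os.makedirs(mount_point, exist_ok=True)  # same effect as: mkdir -p drive
    cmd = ["google-drive-ocamlfuse", mount_point]
    if os.listdir(mount_point):              # the mount point already contains files
        cmd += ["-o", "nonempty"]
    return cmd


# Demo in a temporary directory: first with an empty mount point, then with a leftover file.
with tempfile.TemporaryDirectory() as tmp:
    drive_dir = os.path.join(tmp, "drive")
    empty_cmd = mount_command(drive_dir)
    open(os.path.join(drive_dir, "old.txt"), "w").close()
    full_cmd = mount_command(drive_dir)
    print(full_cmd[-2:])  # ['-o', 'nonempty']
```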

To run a sample script enter:

```
!python main.py --data_path=drive/app/data/
```

If your VM becomes unresponsive and you need to restart it, just run the following command:

12 Apr 2018

Kaldi is one of the most popular speech recognition toolkits, and it is written in C++. Installing Kaldi can sometimes be tricky, so in this post I explain how to install it on UNIX-based operating systems.

First you need to clone the latest version of Kaldi from

This is the official Kaldi INSTALL. Look also at INSTALL.md for the git mirror installation.

[for native Windows install, see windows/INSTALL]

(1) go to tools/ and follow INSTALL instructions there.

(2) go to src/ and follow INSTALL instructions there.

For step (1), go to the tools/ directory (cd tools):

To check the prerequisites for Kaldi, first run

```shell
extras/check_dependencies.sh
```

and see if there are any system-level installations you need to do. Check the output carefully. There are some things that will make your life a lot easier if you fix them at this stage. If your system default C++ compiler is not supported, you can do the check with another compiler by setting the CXX environment variable, e.g.

```shell
CXX=g++-4.8 extras/check_dependencies.sh
```

Then run

```shell
make
```

which by default will install ATLAS headers, OpenFst, SCTK and sph2pipe.

OpenFst requires a relatively recent C++ compiler with C++11 support, e.g. g++ >= 4.7, Apple clang >= 5.0 or LLVM clang >= 3.3. If your system default compiler does not have adequate support for C++11, you can specify a C++11 compliant compiler as a command argument, e.g.

```shell
make CXX=g++-4.8
```

If you have multiple CPUs and want to speed things up, you can do a parallel build by supplying the "-j" option to make, e.g. to use 4 CPUs

```shell
make -j 4
```

In extras/, there are also various scripts to install extra bits and pieces that are used by individual example scripts. If an example script needs you to run one of those scripts, it will tell you what to do.

For step (2), go to the src/ directory (cd ../src from tools/).

These instructions are valid for UNIX-like systems (these steps have been run on various Linux distributions; Darwin; Cygwin). For native Windows compilation, see ../windows/INSTALL.

You must first have completed the installation steps in ../tools/INSTALL (compiling OpenFst; getting ATLAS and CLAPACK headers).

The installation instructions are

```shell
./configure --shared
make depend -j 8
make -j 8
```

Note that we added the "-j 8" to run in parallel because "make" takes a long time. 8 jobs might be too many for a laptop or small desktop machine with not many cores.
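Rather than hard-coding 8 jobs, the job count can be derived from the machine. The sketch below is a hypothetical Python wrapper around the three src/ build steps: make_jobs leaves one core free by default (an assumption, not a Kaldi recommendation), and build_kaldi_src runs each command in the src directory, stopping at the first failure because of check=True.

```python
import os
import subprocess


def make_jobs(reserve=1):
    """Pick a -j value from the CPU count, leaving `reserve` cores free for the rest of the system."""
    return max(1, (os.cpu_count() or 1) - reserve)


def kaldi_src_commands(jobs):
    """The three src/ build steps from the INSTALL text, with a configurable number of jobs."""
    return [
        ["./configure", "--shared"],
        ["make", "depend", "-j", str(jobs)],
        ["make", "-j", str(jobs)],
    ]


def build_kaldi_src(src_dir, jobs=None):
    """Run the build steps inside src_dir; check=True raises on the first failing command."""
    for cmd in kaldi_src_commands(jobs or make_jobs()):
        subprocess.run(cmd, cwd=src_dir, check=True)
```

For example, build_kaldi_src("kaldi/src") from the directory Kaldi was cloned into (the path is an assumption).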

For more information, see documentation at http://kaldi-asr.org/doc/ and click on "The build process (how Kaldi is compiled)".

Remember: if your IAM data was downloaded incorrectly, or your connection was unstable during the installation, delete all the downloaded data and download it again from the beginning.